5 Reasons Why Your Data Pipeline Is Silently Failing

A data pipeline is a set of data processing elements connected in series, where the output of one element is the input of the next. Data pipelines are commonly used to automate workflows that move and transform data for analysis, reporting, or other uses. Think of a factory assembly line for your data: each station performs a specific task, and together they turn raw data into valuable business insights.
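To make the "connected in series" idea concrete, here is a minimal sketch in Python. The stage names (`drop_incomplete`, `to_cents`) and the record fields are hypothetical, chosen only for illustration; real pipelines usually run on an orchestration framework rather than a plain function chain.

```python
from functools import reduce

def run_pipeline(records, stages):
    """Feed the output of each stage into the next one, in order."""
    return reduce(lambda data, stage: stage(data), stages, records)

def drop_incomplete(records):
    # Hypothetical stage: discard rows that have no amount.
    return [r for r in records if r.get("amount") is not None]

def to_cents(records):
    # Hypothetical stage: add a derived integer column.
    return [{**r, "amount_cents": round(r["amount"] * 100)} for r in records]

raw = [{"id": 1, "amount": 12.5}, {"id": 2, "amount": None}]
result = run_pipeline(raw, [drop_incomplete, to_cents])
print(result)
```

Note that the second record disappears without any error being raised, which is exactly the kind of quiet data loss the rest of this article is about.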

When a data pipeline fails, it disrupts that flow, leading to incomplete, delayed, or corrupted data. This can have severe consequences, particularly for businesses that rely on real-time analytics or automated decision-making systems.

Why is Your Data Pipeline Failing?

1. Data Quality Issues

Data pipelines often fail due to issues like missing values, duplicates, or inconsistent formats. These problems might not trigger immediate alerts but can build up over time, affecting the integrity of the data and leading to inaccurate analyses.

2. Schema Changes in Upstream Systems

When the structure of upstream data changes, for example when fields are renamed or data types are modified, it can break downstream processes that rely on a specific schema. These changes can go unnoticed until the pipeline fails to produce the expected results.

3. Data Drift

Over time, the statistical properties of data may change, a phenomenon known as data drift. This can lead to outdated models or inaccurate predictions, as the data used to train models no longer reflects real-world patterns.

4. Data Silos and Inconsistent Data Sources

In large organizations, data can be siloed across different systems or departments, leading to incomplete or inconsistent data when combined in a pipeline. This can result in skewed analytics and flawed business insights.

5. Performance Bottlenecks

As the volume of data grows, pipelines can slow down. If resource limits such as CPU, memory, or network bandwidth aren’t addressed, jobs may stall or fail without triggering alerts, leading to late or incomplete data deliveries.

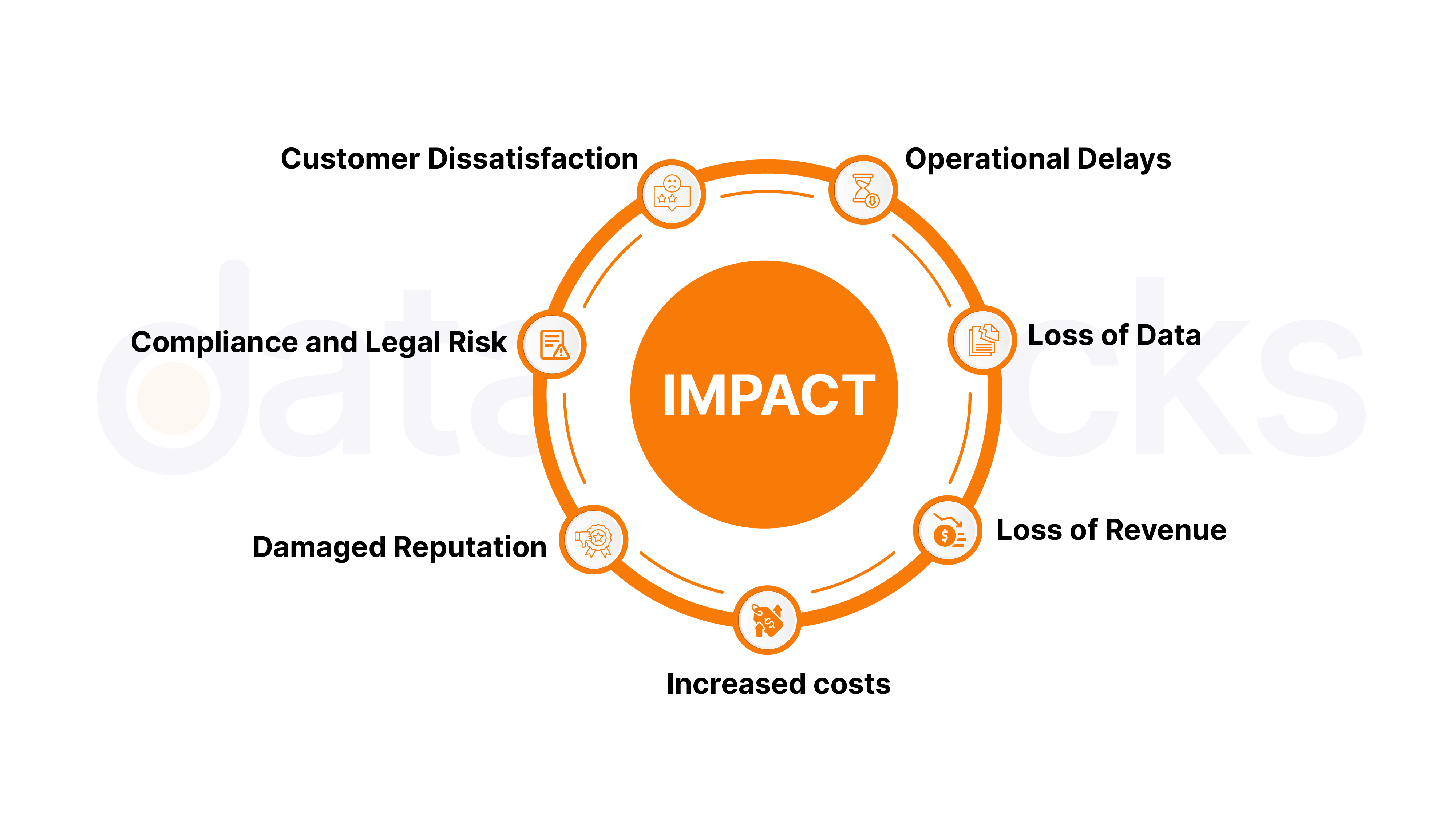

What Is the Impact?

Ways to prevent your data pipelines from breaking

Data Quality Checks

- Automated Validation: Implement validation rules throughout the pipeline to check for missing values, duplicates, and incorrect formats. These checks should be automated at each stage of data processing to ensure data integrity.

- Real-time Monitoring: Continuously monitor data quality and set up automated alerts to flag any inconsistencies or issues before they affect downstream analyses.
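As an illustration of automated validation, the sketch below checks a batch for missing values, duplicate keys, and badly formatted dates. The function name `validate_batch`, the field names, and the `YYYY-MM-DD` date format are assumptions made for this example, not part of any specific tool.

```python
from datetime import datetime

def validate_batch(rows, required, key, date_field):
    """Return a list of human-readable issues found in a batch of dict rows."""
    issues = []
    seen = set()
    for i, row in enumerate(rows):
        # Missing values: a required field is absent, None, or an empty string.
        for field in required:
            if row.get(field) in (None, ""):
                issues.append(f"row {i}: missing {field}")
        # Duplicates: the same key value appeared earlier in the batch.
        k = row.get(key)
        if k is not None:
            if k in seen:
                issues.append(f"row {i}: duplicate {key}={k}")
            seen.add(k)
        # Inconsistent formats: the date must parse as YYYY-MM-DD (assumed format).
        value = row.get(date_field)
        if value not in (None, ""):
            try:
                datetime.strptime(value, "%Y-%m-%d")
            except (ValueError, TypeError):
                issues.append(f"row {i}: bad {date_field} format {value!r}")
    return issues

rows = [
    {"order_id": 1, "customer": "a", "order_date": "2024-01-05"},
    {"order_id": 1, "customer": "b", "order_date": "05/01/2024"},
    {"order_id": 2, "customer": "", "order_date": "2024-01-06"},
]
issues = validate_batch(
    rows,
    required=["order_id", "customer", "order_date"],
    key="order_id",
    date_field="order_date",
)
for issue in issues:
    print(issue)
```

In a real pipeline, a non-empty `issues` list would typically feed an alert or block the batch from moving downstream, depending on how strict the stage needs to be.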

Automated Schema Validation

- Schema Monitoring: Set up tools that automatically detect schema changes, such as field renaming or data type modifications. This allows for real-time schema validation and prevents errors in downstream processes.

- Schema Evolution: Ensure that any schema changes are properly documented and integrated into the pipeline, allowing for smooth adaptation to new data structures.
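A schema check can be as simple as comparing each incoming record against an expected set of field names and types. The sketch below assumes a hypothetical `EXPECTED_SCHEMA` and a hand-written `diff_schema` helper; dedicated schema registries and validation libraries do the same job more thoroughly.

```python
# Hypothetical contract for an upstream "users" feed.
EXPECTED_SCHEMA = {"user_id": int, "email": str, "signup_ts": str}

def diff_schema(expected, record):
    """Compare one record against the expected field names and types."""
    missing = sorted(set(expected) - set(record))
    unexpected = sorted(set(record) - set(expected))
    wrong_type = sorted(
        name
        for name, typ in expected.items()
        if name in record and not isinstance(record[name], typ)
    )
    return {"missing": missing, "unexpected": unexpected, "wrong_type": wrong_type}

# Upstream renamed "email" and started sending user_id as a string.
record = {
    "user_id": "42",
    "email_address": "a@example.com",
    "signup_ts": "2024-01-05T10:00:00Z",
}
drift = diff_schema(EXPECTED_SCHEMA, record)
print(drift)
```

A renamed field shows up as one `missing` entry plus one `unexpected` entry, which is a useful signature to alert on before downstream jobs start producing nulls.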

Monitoring Data Drift

- Continuous Monitoring Tools: Implement tools that track the statistical distribution of incoming data. These tools will detect data drift by comparing current data with historical baselines.

- Model Adjustment: Regularly retrain models or adjust algorithms based on data drift insights to maintain accuracy and relevance in your analysis.
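One simple way to compare current data with a historical baseline is a mean-shift check: measure how far the current batch mean has moved, in units of the baseline standard deviation. This is a deliberately basic sketch, not a full drift test (production tools often use measures such as the population stability index or a Kolmogorov-Smirnov test), and the threshold of 2.0 is an assumed value you would tune for your own data.

```python
from statistics import mean, stdev

def mean_shift(baseline, current):
    """How far the current mean moved, measured in baseline standard deviations."""
    spread = stdev(baseline)
    if spread == 0:
        # A constant baseline: any change at all counts as an unbounded shift.
        return 0.0 if mean(current) == mean(baseline) else float("inf")
    return abs(mean(current) - mean(baseline)) / spread

THRESHOLD = 2.0  # assumed alerting threshold, tune per metric

baseline = [10, 11, 9, 10, 12, 10, 9, 11]   # historical values of some feature
stable = [10, 9, 11, 10]                    # new batch, similar distribution
shifted = [15, 16, 14, 15]                  # new batch, clearly higher

for name, batch in [("stable", stable), ("shifted", shifted)]:
    score = mean_shift(baseline, batch)
    status = "DRIFT" if score > THRESHOLD else "ok"
    print(f"{name}: shift={score:.2f} -> {status}")
```

A check like this only catches changes in the average; shifts in variance or in the shape of the distribution need the richer tests mentioned above.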

Data Integration and Visibility

- Centralized Data Platform: Integrate data from various sources into a unified platform, reducing the effects of data silos. This will ensure data consistency and improve visibility across departments.

- Data Lineage: Establish clear visibility into the flow of data, including transformations and dependencies. This allows teams to track data and identify any disruptions or inconsistencies quickly.
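Lineage can be modeled as a directed graph of datasets. The sketch below uses a hypothetical `LINEAGE` mapping (all dataset names are invented for illustration) and a breadth-first walk to answer a common incident question: if this source is broken, what else is affected?

```python
from collections import deque

# Hypothetical lineage: each dataset maps to the datasets built directly from it.
LINEAGE = {
    "raw_orders": ["clean_orders"],
    "raw_customers": ["clean_customers"],
    "clean_orders": ["daily_revenue", "customer_ltv"],
    "clean_customers": ["customer_ltv"],
    "daily_revenue": ["exec_dashboard"],
    "customer_ltv": [],
    "exec_dashboard": [],
}

def downstream_of(dataset, lineage):
    """Return every dataset that directly or indirectly depends on `dataset`."""
    affected, queue = set(), deque([dataset])
    while queue:
        for child in lineage.get(queue.popleft(), []):
            if child not in affected:
                affected.add(child)
                queue.append(child)
    return sorted(affected)

impacted = downstream_of("raw_orders", LINEAGE)
print(impacted)
```

Lineage tools typically build this graph automatically from query logs or job metadata; the traversal itself stays this simple.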

Performance Monitoring and Scalability

- Real-time Performance Tracking: Use monitoring tools to track performance metrics like CPU, memory, and network usage. This allows you to spot resource bottlenecks and optimize the pipeline.

- Auto-scaling: Implement auto-scaling solutions to dynamically allocate resources based on data volume, ensuring the pipeline handles increased loads without slowing down or failing.
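Performance tracking can start small: wrap each stage so it reports its duration, memory use, and row counts. The sketch below uses only the Python standard library; `run_with_metrics` and the `max_seconds` budget are assumptions for this example. Note that `tracemalloc` measures Python-level allocations only, so process-wide CPU, memory, and network figures would come from your infrastructure monitoring instead.

```python
import time
import tracemalloc

def run_with_metrics(name, stage, rows, max_seconds):
    """Run one stage and report its duration, peak Python memory, and row counts."""
    tracemalloc.start()
    started = time.perf_counter()
    output = stage(rows)
    elapsed = time.perf_counter() - started
    _, peak_bytes = tracemalloc.get_traced_memory()  # returns (current, peak)
    tracemalloc.stop()
    metrics = {
        "stage": name,
        "seconds": elapsed,
        "peak_bytes": peak_bytes,
        "rows_in": len(rows),
        "rows_out": len(output),
        # Flag the stage when it exceeds its (assumed) time budget.
        "over_budget": elapsed > max_seconds,
    }
    return output, metrics

def double(rows):
    # Hypothetical stand-in for a real transformation.
    return [r * 2 for r in rows]

output, metrics = run_with_metrics("double", double, list(range(1000)), max_seconds=5.0)
print(metrics)
```

Comparing `rows_in` with `rows_out` alongside the timing is a cheap way to catch stages that finish on time but quietly drop data.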

How Data Observability Platforms Can Help

Modern data observability platforms such as Datachecks offer a unified approach to challenges like schema changes, data drift, and pipeline performance. They support data integrity and system reliability through real-time tracking, automated anomaly detection, and quality controls applied across the pipeline.

Let’s explore how implementing data observability is helping modern data-driven companies.

Netflix Case Study

Challenge:

Netflix processes 1.3 trillion events daily, with complex schema changes and a need for reliable data to power algorithms.

Solution:

Netflix implemented Metacat, its federated metadata service, as part of an observability approach that offers automated schema management, real-time tracking, and anomaly detection.

Results:

- 90% fewer schema-related incidents

- 60% faster issue detection

- 45% reduction in pipeline failures

- 35% improvement in team productivity

Uber Case Study

Challenge:

Uber handles millions of daily rides, along with dynamic pricing and real-time payments, backed by more than 100 petabytes of data.

Solution:

Uber built a custom observability platform for real-time data monitoring, AI-powered anomaly detection, and lineage tracking.

Results:

- 75% less data downtime

- 50% faster problem resolution

- 99.99% pipeline reliability

- 40% fewer data-related customer issues

These examples show how data observability can automate performance monitoring and replace error-prone manual checks. By maintaining high-performing data ecosystems, businesses can improve operational resilience and customer satisfaction.

In a nutshell

Safeguarding the integrity of your data pipelines is not a one-off task but an ongoing commitment. Employing these strategies can significantly reduce the risk of pipeline failures and help you recover more gracefully when failures do occur.

Remember: The most expensive data pipeline failure is the one you don’t know about. Investing in proper monitoring and mitigation strategies isn’t just about preventing failures — it’s about ensuring your data infrastructure can scale reliably with your business needs.
