Data Migration QA Needs To Move Beyond Scripts and SQL

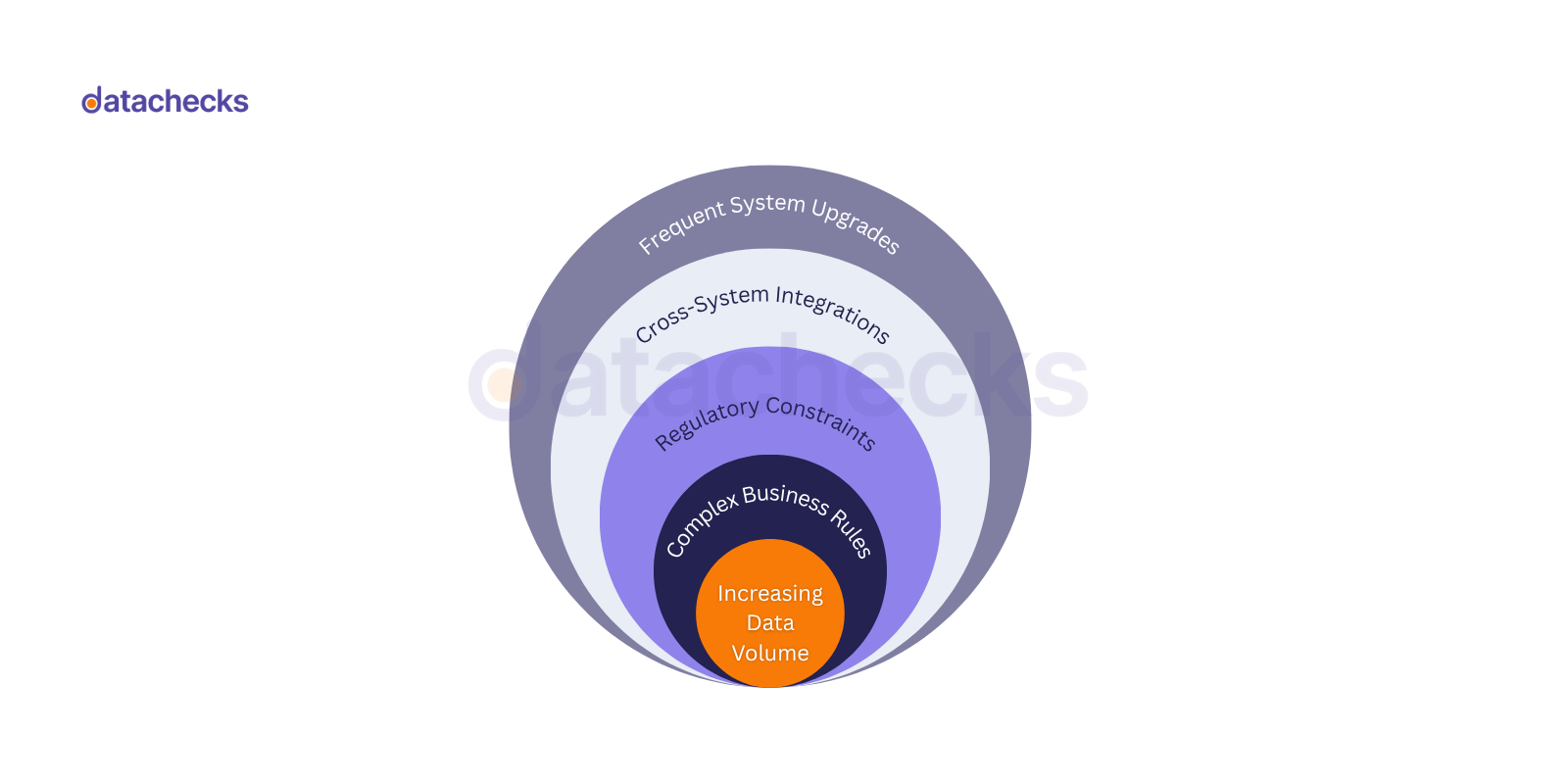

For a data migration to succeed, QA cannot rely solely on scripts and SQL queries: these traditional methods have inherent limitations in modern, complex data environments.

Here's why traditional approaches are often insufficient and what advanced solutions offer:

Limitations of Scripts and SQL in Data Migration QA

Scalability and Data Volume

Manual or script-based testing struggles with the "staggering" and ever-increasing volume of data in modern organisations. Validating parity across hundreds, thousands, or millions of tables with simple queries or scripts is time-consuming and often impractical within project timelines.

Human Error and Accuracy

Manual testing is highly prone to human error, including oversights, inaccuracies, and inconsistencies, especially when dealing with complex data structures. While human intuition is valuable, it can miss subtle errors, such as a currency changing while a numerical value remains the same, which automated tools are better equipped to detect. Automated validation has been shown to reduce error detection time significantly and improve accuracy rates compared to manual processes.
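
The currency example can be made concrete with a small sketch. It assumes rows are plain dictionaries keyed by a hypothetical `invoice_id`, and the helper name `find_field_mismatches` is invented for illustration. Comparing every field, rather than only the numeric one, is what surfaces the changed currency:

```python
from typing import Dict, List, Tuple

Row = Dict[str, object]


def find_field_mismatches(
    source: List[Row], target: List[Row], key: str
) -> List[Tuple[object, str, object, object]]:
    """Compare every field of rows that share a key.

    Returns (key_value, field_name, source_value, target_value) tuples.
    """
    target_by_key = {row[key]: row for row in target}
    mismatches = []
    for src in source:
        tgt = target_by_key.get(src[key])
        if tgt is None:
            # The row did not arrive in the target at all.
            mismatches.append((src[key], "<missing row>", src, None))
            continue
        for field, src_value in src.items():
            if tgt.get(field) != src_value:
                mismatches.append((src[key], field, src_value, tgt.get(field)))
    return mismatches


# Hypothetical data: the amount is unchanged, but the currency is not.
source_rows = [
    {"invoice_id": 1, "amount": 100.0, "currency": "USD"},
    {"invoice_id": 2, "amount": 250.0, "currency": "EUR"},
]
target_rows = [
    {"invoice_id": 1, "amount": 100.0, "currency": "USD"},
    {"invoice_id": 2, "amount": 250.0, "currency": "GBP"},
]

mismatches = find_field_mismatches(source_rows, target_rows, key="invoice_id")
print(mismatches)  # [(2, 'currency', 'EUR', 'GBP')]
```

A check that compared only `amount` would report both invoices as identical.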

Limited Coverage and Depth

Traditional methods often result in insufficient test coverage, meaning critical data issues or rare data combinations might go undetected. It is difficult to standardise and consistently replicate tests across different datasets or migration projects using manual or basic script-based approaches.

Lack of Real-time Monitoring

Scripts and basic SQL queries do not offer real-time monitoring or alerting capabilities during the migration process. This absence of immediate feedback can lead to delays in identifying and resolving issues, potentially disrupting business operations or causing data degradation.

Complexity of Data Transformations and Inconsistencies

Data migrations frequently involve complex data transformations, cleansing, and mapping rules to align with new system requirements. Manually validating these transformations is challenging, and simple scripts may not be sophisticated enough to effectively implement or verify complex logic. Issues like "false friends" (functions appearing identical but yielding different results) or varying data type handling across databases (e.g., how empty strings or nulls are treated) can easily be overlooked.
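
The null-handling point is easy to illustrate. Oracle, for instance, stores an empty `VARCHAR2` string as NULL, whereas PostgreSQL keeps the two distinct, so a naive equality check can report spurious differences or hide real ones. The sketch below makes the rule an explicit, testable decision; the function names and the `empty_is_null` flag are assumptions for illustration, not part of any particular tool:

```python
from typing import Optional


def normalize_text(value: Optional[str], empty_is_null: bool) -> Optional[str]:
    """Map a text value to a canonical form before comparison."""
    if value is None:
        return None
    if empty_is_null and value == "":
        # Treat '' like NULL, as a source system such as Oracle would.
        return None
    return value


def values_match(
    source_value: Optional[str],
    target_value: Optional[str],
    empty_is_null: bool = True,
) -> bool:
    """Compare two text values under an explicit empty-string policy."""
    return normalize_text(source_value, empty_is_null) == normalize_text(
        target_value, empty_is_null
    )


print(values_match("", None))                       # True
print(values_match("", None, empty_is_null=False))  # False
```

Writing the policy down as a parameter means the migration team decides, once, whether an empty string and a null count as the same value, instead of each ad hoc query deciding differently.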

Resource Intensiveness and Cost

Data quality teams can spend a significant portion of their time (e.g., 45%) on manual data verification tasks, leading to operational inefficiencies and reduced productivity. Relying on manual efforts can incur substantial costs and delays. The global cloud migration services market's projected growth rate of 24% annually from 2024 to 2032 indicates a rapidly expanding need for efficient migration strategies that manual methods cannot sustain.

Absence of Stakeholder Confidence

Proving data parity and ensuring stakeholder sign-off can be incredibly challenging if there's no robust, verifiable method to demonstrate that data remains unchanged between legacy and new systems.

The Role of Advanced Solutions

To overcome these limitations, data migration QA is increasingly leveraging sophisticated tools and methodologies:

Intelligent Automation and AI/Machine Learning (ML)

◦ Automated data validation frameworks handle quality issues automatically, significantly reducing manual effort and improving data quality with better speed and cost-effectiveness.

◦ AI-led and AI-enabled migration accelerators can dynamically create target data models and schemas and optimise system resource usage, accelerating migration timelines (e.g., by 40% in one case study) and reducing time-to-market.

◦ Advanced RPA systems integrate AI, ML, Natural Language Processing (NLP), Natural Language Generation (NLG), and computer vision to create intelligent automation solutions that adapt to changing input conditions and go beyond simple step recording. These can detect complex semantic inconsistencies and predict potential data issues with high accuracy.

◦ Machine learning-based data profiling solutions show promise in handling complex data ecosystems and improving data quality metrics significantly.

Comprehensive Validation and Reconciliation Platforms

◦ Specialised platforms, such as Acceldata's observability platform and Datagaps' DataOps Suite, offer end-to-end data testing, robust data profiling, quality checks, data mapping, transformation tools, and comprehensive reconciliation capabilities.

◦ Tools like Datafold's Migration Agent employ "data diffing" (row-by-row comparisons) to precisely validate data parity between source and target systems, even across different databases, identifying changes within seconds for vast datasets. Reconciliation is a critical test that, for large datasets, necessitates automation.

◦ These tools can provide 100% record coverage for data accuracy, overcoming the resource limitations of manual efforts.
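
To show what row-level diffing with full record coverage means in principle, here is a minimal sketch. It is not how Datafold or any other product implements it; it simply fingerprints every row with a hash and compares fingerprints by primary key. The function names, the tuple row layout, and the sample data are all assumptions for illustration:

```python
import hashlib
from typing import Dict, Iterable, List, Tuple


def row_fingerprint(row: Tuple) -> str:
    """Hash a row's values into a fixed-size fingerprint."""
    # repr() keeps None, 'None', 1 and '1' distinguishable from each other.
    encoded = "\x1f".join(repr(value) for value in row)
    return hashlib.sha256(encoded.encode("utf-8")).hexdigest()


def diff_tables(
    source: Iterable[Tuple], target: Iterable[Tuple], key_index: int = 0
) -> Dict[str, List]:
    """Classify every key as missing, unexpected, or changed."""
    src = {row[key_index]: row_fingerprint(row) for row in source}
    tgt = {row[key_index]: row_fingerprint(row) for row in target}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "changed": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


# Hypothetical rows: (id, name, balance)
source_rows = [(1, "Ada", 10.0), (2, "Grace", 20.0), (3, "Linus", 30.0)]
target_rows = [(1, "Ada", 10.0), (2, "Grace", 20.5), (4, "Ken", 40.0)]

report = diff_tables(source_rows, target_rows)
print(report)
# {'missing_in_target': [3], 'unexpected_in_target': [4], 'changed': [2]}
```

Every record is checked, not a sample. At production scale the same idea is usually applied per block of keys, so that only blocks whose hashes differ need a row-by-row comparison.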

Real-time Monitoring and Observability

◦ Modern solutions provide continuous, real-time data monitoring and anomaly detection, allowing for immediate identification and rectification of issues. This proactive approach reduces the mean time to detection and prevents potential data quality issues from impacting downstream systems.
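
As a rough illustration of anomaly detection on a migration feed, the sketch below flags a batch whose row count deviates sharply from recent history using a z-score. Real observability platforms use richer models; the function name, the threshold of 3.0, and the sample counts are assumptions:

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(
    history: Sequence[float], latest: float, threshold: float = 3.0
) -> bool:
    """Return True if `latest` is more than `threshold` standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical row counts from the last five migration batches.
recent_batch_counts = [1000, 1020, 990, 1010, 1005]

print(is_anomalous(recent_batch_counts, 1015))  # False
print(is_anomalous(recent_batch_counts, 400))   # True
```

Running a check like this as each batch lands, rather than after the migration completes, is what shortens the mean time to detection.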

Data Governance and Data Contracts

◦ Establishing strong data governance mechanisms is pivotal to successful migration projects. This includes defining data quality standards and ownership, and implementing data lineage and traceability.

◦ Data contracts define the structure, format, and specifications of data to be migrated, acting as a crucial benchmark for automated validation. They foster standardisation, improve data quality, enhance efficiency, and streamline error checking in ways that simple scripts cannot achieve systematically.
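
A data contract can be as simple as a machine-readable list of expected fields that every migrated record is checked against. The following is a minimal sketch, not a standard contract format: `FieldSpec`, `validate_record`, and the customer fields are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass(frozen=True)
class FieldSpec:
    """One field in the contract: its name, Python type, and nullability."""
    name: str
    dtype: type
    nullable: bool = False


def validate_record(record: Dict[str, Any], contract: List[FieldSpec]) -> List[str]:
    """Return a list of contract violations for a single record."""
    errors = []
    for spec in contract:
        if spec.name not in record:
            errors.append(f"{spec.name}: missing field")
            continue
        value = record[spec.name]
        if value is None:
            if not spec.nullable:
                errors.append(f"{spec.name}: null not allowed")
            continue
        if not isinstance(value, spec.dtype):
            errors.append(
                f"{spec.name}: expected {spec.dtype.__name__}, "
                f"got {type(value).__name__}"
            )
    # Fields the contract does not know about are also reported.
    allowed = {spec.name for spec in contract}
    for extra in sorted(record.keys() - allowed):
        errors.append(f"{extra}: field not in contract")
    return errors


# Hypothetical contract for a customer table.
customer_contract = [
    FieldSpec("customer_id", int),
    FieldSpec("email", str),
    FieldSpec("signup_date", str, nullable=True),
]

good = {"customer_id": 1, "email": "a@example.com", "signup_date": None}
bad = {"customer_id": "1", "email": None}

print(validate_record(good, customer_contract))  # []
print(validate_record(bad, customer_contract))
# ['customer_id: expected int, got str', 'email: null not allowed',
#  'signup_date: missing field']
```

Because the contract is data rather than logic buried in individual queries, the same definition can drive validation on both the source extract and the target load.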

Structured Methodologies and Dedicated Tools

◦ Adopting a stringent process model with well-defined quality assurance measures across pre-migration, migration, and post-migration phases is essential.

◦ Utilising specialised frameworks and libraries, such as those built on Apache Spark (e.g., Deequ, Datagaps ETL Validator), enables high-performance parallel execution for validating billions of records and provides robust built-in testing capabilities.

In conclusion, while scripts and SQL queries may offer a basic starting point, the scale, complexity, and critical nature of data in modern enterprises demand a shift towards more sophisticated, automated, AI-driven, and platform-based QA solutions to ensure successful, accurate, and efficient data migrations.
